How to optimise for AI visibility

Optimising for large language models (LLMs) has become a major topic in digital marketing over the past year. As tools like ChatGPT, Perplexity, Copilot and Google's AI Overviews and AI Mode increasingly shape how people find information online, many industry commentators have suggested that achieving visibility in AI-generated results is simply an extension of traditional SEO.

There's some truth to this, but the way LLMs retrieve and use content means visibility in AI results also requires some new tactics, and certain traditional SEO techniques take on new importance. Understanding these differences is key to making your content visible in AI-generated answers. Some in the industry are now calling this generative engine optimisation, or GEO.

LLM optimisation is still evolving

Much of what we now understand comes from research and experimentation by industry experts like Mike King and Josh Blyskal. Many of the recommendations in this post are drawn from Mike's recent webinar with Rand Fishkin for SparkToro on optimising for LLMs, which is well worth watching.

Before we dive into the key recommendations for AI visibility, it's worth noting that this discipline is still evolving. AI platforms will continue to change how they surface information, just as Google's algorithms have evolved over the past two decades. What follows reflects the most reliable understanding we have at this point in time.

High rankings in classic search do not guarantee AI visibility

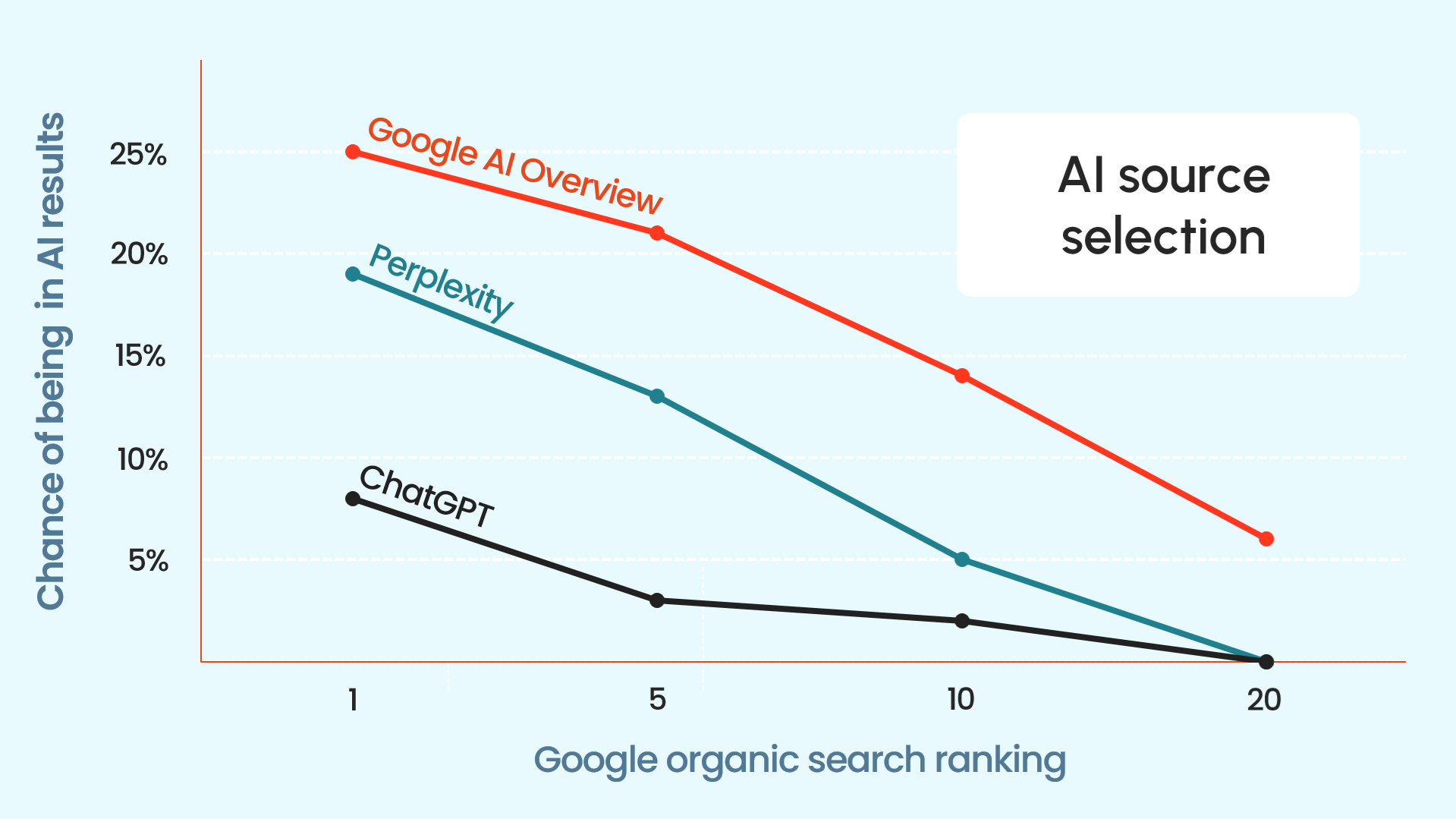

One of the clearest indicators that LLM optimisation differs from traditional SEO comes from recent data shared by the AI analytics platform Ziptie.

Their analysis shows that even a number one ranking in Google's classic organic search results only gives a brand about a 25% chance of being selected in an AI-generated answer for the same query.

In other words, strong performance in search rankings offers limited influence over whether an LLM will use your content. This is because LLMs don't index and rank information in the same way as traditional search engines. They selectively pull content, assess smaller sections of information, and build answers using material from multiple sources.

To optimise effectively for AI visibility, it helps to understand this process.

How LLMs retrieve and use content

Most AI platforms gather information in two stages. First, they select which sources to fetch content from, then they break that content down into small usable pieces to form an answer.

Selecting what to fetch

Before some LLMs read your content, they first decide whether your page is even worth exploring. This stage relies heavily on page-level signals like page titles, meta descriptions, URLs, structured data, and technical accessibility. These signals act as a relevance and trust filter - in effect, they're your introduction to the LLM.

While metadata like this plays some role in traditional SEO, it has a much bigger impact on whether AI platforms use your content. For many LLMs, these signals are assessed before any deeper content review takes place. So if your metadata doesn't clearly communicate what the page covers and why it's relevant, the content may never be retrieved.
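To make the page-level signals above more concrete, here is a minimal Python sketch that checks a page's HTML for a title, a meta description and JSON-LD structured data. The function name `audit_page_signals` and the choice of checks are illustrative assumptions, not a description of how any AI platform actually filters pages.

```python
from html.parser import HTMLParser


class PageSignalParser(HTMLParser):
    """Collects the title, meta description and JSON-LD presence from HTML."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.has_json_ld = False
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = (attrs.get("content") or "").strip()
        elif tag == "script" and attrs.get("type") == "application/ld+json":
            self.has_json_ld = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text inside <title> is collected.
        if self._in_title:
            self.title += data


def audit_page_signals(html):
    """Return warnings about missing page-level signals (hypothetical checks)."""
    parser = PageSignalParser()
    parser.feed(html)
    warnings = []
    if not parser.title.strip():
        warnings.append("missing <title>")
    if not parser.meta_description:
        warnings.append("missing meta description")
    if not parser.has_json_ld:
        warnings.append("no JSON-LD structured data found")
    return warnings


# Made-up sample page: it has a title but no description or structured data.
sample = """
<html><head>
<title>Remote vs office working: productivity compared</title>
</head><body><h1>Remote vs office working</h1></body></html>
"""
print(audit_page_signals(sample))
```

A check like this only confirms the signals exist. Whether they clearly communicate what the page covers still needs a human read.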

Extracting usable information

Once a page is retrieved, the AI breaks it into smaller sections - a process known as content chunking - and evaluates each section for clarity, relevance, and usefulness. It then combines the strongest sections from that page, and other sources, to form the answer to a query.

So where traditional SEO focuses on matching a query to the most relevant page, LLMs explore a topic more broadly and draw on content from multiple sources. When someone asks a question, the AI automatically expands it into related sub-questions - a process known as query fan-out - exploring the wider topic rather than searching for one best answer.

For example, a question like "Is remote working better than office working?" may lead the AI to look for related information like remote versus office working productivity, employee satisfaction, collaboration challenges, company culture, mental health impact, and cost savings. It then pulls relevant sections from different sources and combines them into a single answer.

So AI visibility comes from being useful across a web of related questions. Each well-written section of your content becomes a chance to be included in the AI-generated answer.
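The fan-out idea can be sketched as a toy example in Python. Everything here is an assumption for illustration: the sub-questions are hard-coded (real platforms generate them with a model, and their methods aren't public), the sources are placeholder domains, and simple word overlap stands in for whatever relevance scoring a real system uses.

```python
import re

# Hypothetical, hard-coded fan-out for the example question.
FAN_OUT = {
    "Is remote working better than office working?": [
        "remote working productivity",
        "remote working employee satisfaction",
        "remote working cost savings",
    ],
}

# Placeholder sections from three made-up sources.
chunks = [
    {"source": "site-a.example",
     "text": "Placeholder text about productivity for remote teams."},
    {"source": "site-b.example",
     "text": "Placeholder text about employee satisfaction and wellbeing."},
    {"source": "site-c.example",
     "text": "Placeholder text about cost savings for businesses."},
]


def tokens(text):
    """Lower-case a string and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def best_chunk(sub_question, candidates):
    """Pick the section sharing the most words with the sub-question."""
    wanted = tokens(sub_question)
    return max(candidates, key=lambda c: len(wanted & tokens(c["text"])))


question = "Is remote working better than office working?"
selected = [(sub, best_chunk(sub, chunks)["source"]) for sub in FAN_OUT[question]]
for sub, source in selected:
    print(f"{sub} -> {source}")
```

In this toy run each sub-question is matched to a different source, which is the point: a page only needs one clear, relevant section to earn a place in the combined answer.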

Not all systems work the same way

While the two-stage process above describes how most AI platforms work, it's worth noting that not all systems do things the same way. Some fetch content in real time, others use specialised search indexes, and some rely primarily on their training data.

This means optimising for AI visibility is about making your content clear, structured, and accessible across different platforms.

Content chunking and extractable answers

Because AI works at the level of individual sections rather than whole pages, content structure is critically important. Chunking content - organising pages into clearly defined sections that each focus on one area - is now one of the most effective on-site techniques for AI visibility.

What this means in practice is that LLM-friendly content typically includes:

Clear, descriptive headings (often phrased as questions)

Focused paragraphs that address one area at a time

Lists and comparisons where helpful

Direct explanations and definitions

So instead of burying insights within long paragraphs, it's far better to separate and explicitly answer related questions. Building on our previous example, this could be questions like:

What impact does remote work have on productivity?

How does remote work affect employee satisfaction and wellbeing?

What challenges do remote teams face around collaboration and communication?

How does remote work influence company culture?

Are there cost savings for businesses and employees?

Doing this creates clean, easy-to-use chunks of content - making content simpler for people to browse and, importantly, far easier for LLMs to retrieve and use in answers.
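As a rough illustration of why question-led headings help, the Python sketch below splits a page into self-contained sections, one per heading. It assumes headings are marked with a `## ` prefix and uses placeholder body text. Real platforms chunk content in their own ways, so treat this as a simplified model rather than their actual method.

```python
def chunk_by_headings(text, marker="## "):
    """Split text into one chunk per heading line (assumed '## ' prefix)."""
    chunks = []
    current = None
    for line in text.splitlines():
        if line.startswith(marker):
            # A new heading starts a new chunk.
            current = {"heading": line[len(marker):].strip(), "body": []}
            chunks.append(current)
        elif current is not None and line.strip():
            # Non-empty lines belong to the most recent heading.
            current["body"].append(line.strip())
    return [
        {"heading": c["heading"], "body": " ".join(c["body"])}
        for c in chunks
    ]


# Placeholder page using two of the example questions as headings.
page = """\
## What impact does remote work have on productivity?
Placeholder answer about productivity.

## How does remote work influence company culture?
Placeholder answer about culture.
More placeholder detail on culture.
"""

for chunk in chunk_by_headings(page):
    print(chunk["heading"], "->", chunk["body"])
```

Each resulting chunk pairs a question with its answer, so it still makes sense when lifted out of the page on its own.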

From keyword targeting to topic mastery

Traditional SEO strategies focus on identifying specific keywords and building pages to rank for each one. But LLMs instead reward a strong understanding of a whole topic. They will surface content that explains subjects thoroughly and covers related questions naturally.

This often means a single authoritative, well-structured resource can outperform dozens of narrowly focused pages.

So optimising for LLMs moves the approach from "What keyword should this page rank for?" to "Does this content fully answer the questions someone would have about this topic?"

That means topic depth, clarity and completeness increasingly matter for AI visibility.

Technical accessibility remains essential

In traditional SEO, technical accessibility has always been critical for making content discoverable. The same is true for LLM optimisation - if your content can't be easily accessed and read, it won't appear in AI-generated answers.

If your content isn't clearly visible in the underlying code, is blocked by robots directives (such as robots.txt rules), sits behind a login page, or is slow to load, AI platforms may never access it.

The principles remain the same, but the stakes are different. Where traditional search might still surface your page despite some technical issues, LLMs are more likely to skip it entirely and move to a more accessible source.
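One accessibility check that is easy to automate is whether your robots.txt rules block a given crawler. The sketch below uses Python's standard `urllib.robotparser` module. The robots.txt content, the URL and the helper name `allowed_agents` are made up for illustration, and the user agents shown (OpenAI's `GPTBot` and `Googlebot`) are just two examples. Check each platform's own documentation for the crawler names it uses.

```python
from urllib.robotparser import RobotFileParser


def allowed_agents(robots_txt, url, agents):
    """Report whether each user agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Made-up robots.txt that blocks one AI crawler but allows everything else.
robots_txt = """
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

result = allowed_agents(
    robots_txt,
    "https://example.com/pricing",
    ["GPTBot", "Googlebot"],
)
print(result)
```

Rules like the one above are sometimes added deliberately, but they are also easy to inherit from an old template without noticing, so it's worth checking.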

Your brand's wider presence informs LLMs

LLMs don't just rely on your website to understand your brand. They learn from other available sources, with platforms like Reddit and YouTube currently among those most referenced by LLMs for brand information.

Content away from your website - discussions, reviews, community posts, videos and expert commentary - all help LLMs understand your reputation, expertise, product or service quality, and customer experiences.

If your website tells one story but the wider web tells another, the AI will usually follow the broader consensus.

These brand mentions across the wider web are a form of authority building. Where backlinks act as a trust signal in traditional SEO, consistent positive brand presence across influential platforms plays a similar role in LLM optimisation.

AI visibility builds awareness, not traffic

When considering LLM optimisation, it's important to understand what AI visibility delivers compared to classic search rankings.

Appearing in traditional search results typically drives website visits. However, AI-generated answers work differently.

In most cases, users get the information they need directly from the AI response and never click through to the original source. This is explored in more detail in my previous blog post, and is reinforced by Mike King and Rand Fishkin in their recent SparkToro webinar.

The value of LLM visibility is therefore user influence rather than user acquisition. Strong presence in AI results helps to:

Build brand recognition

Establish authority in your industry

Shape perception and trust

Success is measured by how your brand is contributing to answers - not by clicks through to your website.

You can't avoid AI-generated answers about your brand

One final point worth highlighting: LLMs will answer questions about your business whether or not you have published content that addresses them.

A simple example is when a business avoids publishing pricing information on its website, hoping potential customers will get in touch directly to find out more. When people then ask AI tools questions like "How much does this company charge?" or "Are there any hidden fees?", the AI will often pull answers from reviews, forums, or social posts instead.

This creates the risk of inaccurate information, outdated details, and negative framing.

Publishing transparent, authoritative content allows you to shape what AI systems rely on, rather than leaving your brand narrative entirely in the hands of external sources.

Summary

While traditional SEO techniques remain essential for making content accessible and discoverable, appearing in AI results requires additional optimisation focused on retrieval, structure, topic depth and off-site signals.

LLMs retrieve and rank content differently to search engines, evaluating information at a section level and assembling answers from multiple sources. Metadata influences what gets pulled in, content structure influences what gets used, and strong topic coverage increasingly outweighs narrow keyword targeting.

At the same time, brand presence across the wider web now plays a major role in shaping AI outputs, while visibility itself delivers influence and trust rather than direct traffic.

As AI-driven search continues to evolve, one thing is already clear - ranking well in classic search results alone is not enough to ensure discoverability across AI platforms.